Back in June, Apple announced ARKit, a new framework for creating augmented reality experiences on iPhones and iPads, and ever since then the tech community has been guessing how the other big market players would respond. On August 29th, Google finally did. It announced ARCore, a tool to match ARKit, with the ambition of bringing AR experiences to 100M devices, starting with the Pixel, Pixel XL, and Samsung’s S8 (running Android 7.0 Nougat and above).

While both development kits are designed to produce quite similar experiences, the software behind them differs. Our technology enthusiasts could not miss such an event, so today I will briefly look at the features and capabilities of both frameworks. I'll also compare ARKit and ARCore, explore the market opportunities for each platform, and consider the possible future of augmented reality technology. The race is on, so let's begin.

Technologies & Features

ARCore utilizes three main technologies to bring virtual content to life. These are:

- Motion Tracking, which helps applications understand the position of the smartphone in space.

- Environmental Recognition, which allows Android devices to use the camera view to identify flat surfaces in the area, such as a stage or a table, and display virtual objects on top of them at the right angle.

- Lighting Estimation, which helps the smartphone evaluate the current lighting conditions.

Moreover, like any AR technology, ARCore uses anchors fixed to points in the real world to keep virtual objects stable whenever the user changes the device's pose.

ARCore is integrated not only with Android Studio but also with game engines such as Unreal Engine and Unity. You can also use ARCore to deploy augmented reality experiences on the web.

ARKit, on the other hand, also uses Lighting Estimation and Environmental Recognition (which Apple calls “Scene Understanding”) to help iPhones and iPads detect the size and location of horizontal surfaces near the viewer and the amount of light available in a scene. Another interesting technology that ARKit introduces is Visual Inertial Odometry (VIO). This feature plays the same role as ARCore's Motion Tracking, and Apple claims it does so with high accuracy and without any need for calibration. It tracks how the device moves through the environment, including its speed, trajectory, and angle, by combining camera data with readings from the device's motion sensors. ARKit also integrates with third-party rendering engines such as Unity and Unreal Engine, and Apple claims it is highly optimized to run smoothly on its high-performance hardware.

As you can see, the technology behind these two frameworks is quite similar. However, in our opinion, ARKit is better optimized and better prepared for the heavy load of curious developers. Moreover, judging by the demos released earlier this summer, ARKit appears to deliver much better graphics quality, another advantage that tilts the scales in Apple's favor.

A Deeper Dive into the Technology Behind Them

In this ARKit vs. ARCore overview, we must pay particular attention to the technology behind these platforms to see how they differ. Let's dig deeper into the features each platform offers and how they work.

ARKit

Technically, ARKit uses VIO, which I mentioned earlier, to track the position of the user (and, of course, the iPhone or iPad) in real-world space; in other words, the device scans its surroundings and updates its estimate of your position 30 or more times every second. Two systems produce independent estimates, which helps the technology achieve higher accuracy. The visual system (the device's camera) tracks the position by matching distinctive real-world features to pixels in each camera frame.

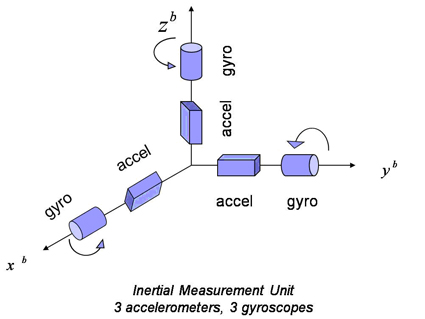
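ARKit's tracking internals are not public, so the following is only a minimal sketch of the principle behind visual tracking. It assumes an ideal pinhole camera with made-up intrinsics (fx = fy = 500, cx = 320, cy = 240) and features that have already been matched between the map and the image. For a candidate pose, it projects known 3D feature points into the image and measures how far they land from where they were actually detected; the pose with the smallest reprojection error wins.

```python
import numpy as np

def project(points_world, R, t, fx, fy, cx, cy):
    """Project 3-D points into pixel coordinates with an ideal pinhole camera.

    R (3x3) and t (3,) map world coordinates into the camera frame,
    p_cam = R @ p_world + t, and the camera looks along its +z axis.
    """
    p_cam = points_world @ R.T + t
    u = fx * p_cam[:, 0] / p_cam[:, 2] + cx
    v = fy * p_cam[:, 1] / p_cam[:, 2] + cy
    return np.stack([u, v], axis=1)

def reprojection_error(points_world, observed_px, R, t, intrinsics):
    """Mean pixel distance between predicted and detected feature locations."""
    predicted = project(points_world, R, t, *intrinsics)
    return float(np.mean(np.linalg.norm(predicted - observed_px, axis=1)))

# Hypothetical scene: four distinctive features 2-4 m in front of the camera.
features = np.array([[0.0, 0.0, 2.0], [0.5, -0.2, 3.0],
                     [-0.4, 0.3, 2.5], [0.2, 0.4, 4.0]])
intrinsics = (500.0, 500.0, 320.0, 240.0)    # fx, fy, cx, cy (assumed values)
R = np.eye(3)
true_t = np.array([0.05, 0.0, 0.0])          # the pose we pretend not to know
observed = project(features, R, true_t, *intrinsics)

# Brute-force search over one translation axis, kept simple for readability.
candidates = np.round(np.linspace(-0.1, 0.1, 21), 3)
errors = [reprojection_error(features, observed, R, np.array([tx, 0.0, 0.0]), intrinsics)
          for tx in candidates]
best_tx = float(candidates[int(np.argmin(errors))])
print(f"estimated x-offset: {best_tx:.2f} m")
```

The one-axis search is only for illustration; practical systems minimize reprojection error over all six degrees of freedom with nonlinear least squares.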

On the other hand, the inertial system, also referred to as the Inertial Measurement Unit (IMU), is a set of sensors built into iPhones and iPads. It provides feedback from the gyroscope, which measures changes in the device's orientation, and from the accelerometer, which measures its linear acceleration. The data from both systems is then combined with Kalman filtering, an algorithm that weighs each system's estimate by how uncertain it is to produce the most accurate estimate of the device's real position (the ground truth). The resulting position is then published through ARKit.
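Apple does not publish ARKit's filter, so here is only a minimal one-dimensional sketch of a single Kalman measurement update with hypothetical variances. Rather than simply choosing one system, the update blends the IMU prediction and the camera measurement, leaning toward whichever is less uncertain.

```python
def kalman_update(x_pred, var_pred, z, var_z):
    """One scalar Kalman measurement update.

    x_pred, var_pred: predicted position and its variance (e.g. from IMU integration)
    z, var_z:         measured position and its variance (e.g. from the camera)
    Returns the fused position and its (smaller) variance.
    """
    gain = var_pred / (var_pred + var_z)   # 0 = ignore measurement, 1 = trust it fully
    x = x_pred + gain * (z - x_pred)       # move the prediction toward the measurement
    var = (1.0 - gain) * var_pred          # fused estimate is more certain than either input
    return x, var

# Hypothetical numbers: the IMU predicts 1.00 m but has drifted (variance 0.04 m^2);
# the camera sees 1.10 m with much less uncertainty (variance 0.01 m^2).
fused, fused_var = kalman_update(1.00, 0.04, 1.10, 0.01)
print(f"fused position: {fused:.3f} m, variance: {fused_var:.4f} m^2")
```

With these numbers the gain is 0.8, so the fused estimate lands at 1.08 m, much closer to the camera's reading, and its variance (0.008) is lower than that of either input.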

A huge step forward with VIO is that IMU measurements are taken approximately 1,000 times per second or more, capturing the device's motion. A process called dead reckoning is used to calculate the device's movement between visual measurements. It is essentially an educated guess: the current position is calculated by advancing the previously determined position according to the movements measured since then. However, this approach also has flaws: the more time passes between updates from the visual system, the less accurate the position will be compared to the ground truth.
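To see why the estimate degrades without visual updates, here is a minimal one-dimensional sketch of dead reckoning, assuming a hypothetical accelerometer bias of 0.01 m/s² and no other noise. Because position comes from integrating acceleration twice, even a tiny constant error grows roughly with the square of time.

```python
def dead_reckon(accel_samples, dt, x0=0.0, v0=0.0):
    """1-D dead reckoning: integrate acceleration into velocity, then velocity into position."""
    x, v = x0, v0
    positions = []
    for a in accel_samples:
        v += a * dt          # advance velocity using the measured acceleration
        x += v * dt          # advance position using the updated velocity
        positions.append(x)
    return positions

# Hypothetical scenario: the phone lies perfectly still, but its accelerometer
# reports a small constant bias of 0.01 m/s^2. Samples arrive at 1,000 Hz.
rate_hz = 1000
bias = 0.01
positions = dead_reckon([bias] * (10 * rate_hz), dt=1.0 / rate_hz)

for seconds in (1, 5, 10):
    print(f"after {seconds:2d} s: estimated drift {positions[seconds * rate_hz - 1]:.4f} m")
```

The phantom movement is about 5 mm after one second but about 0.5 m after ten seconds, which is why frequent visual corrections matter.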

Visual assessment is usually done at 30 FPS, the standard camera frame rate. Optical systems have their own issues and do not measure distance well if you stand far enough from the object. The great news is that the flaws of each system turn into strengths when VIO works as a whole. When the camera sees a scene with few or no distinctive features (for instance, a single-color table or a wall), the inertial tracking system can still measure the device's position from its movements. Conversely, when the device is still and the inertial system has very little data, the visual system can carry the load. This is where the Kalman filter comes into play: it weighs both estimates according to their reliability to ensure precise tracking.
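The two systems can be combined in a small simulation. This is a hedged sketch, not ARKit's actual filter: a textbook linear Kalman filter in one dimension, with state [position, velocity], that predicts with biased IMU readings at 1,000 Hz and corrects with noisy camera positions at roughly 30 Hz. The bias, the camera noise, and the accelerometer noise parameter `sigma_a` are all assumptions.

```python
import numpy as np

def fuse_imu_and_camera(duration_s=10.0, imu_hz=1000, camera_hz=30,
                        accel_bias=0.01, camera_noise=0.005, sigma_a=0.1, seed=0):
    """Simulate a still device; return final |position error| for fused and IMU-only tracking."""
    rng = np.random.default_rng(seed)
    dt = 1.0 / imu_hz
    steps = int(duration_s * imu_hz)
    camera_every = round(imu_hz / camera_hz)   # 33 IMU steps between camera frames

    F = np.array([[1.0, dt], [0.0, 1.0]])      # constant-velocity motion model
    B = np.array([0.5 * dt**2, dt])            # how measured acceleration enters the state
    Q = sigma_a**2 * np.outer(B, B)            # process noise from accelerometer noise
    H = np.array([[1.0, 0.0]])                 # the camera observes position only
    R = np.array([[camera_noise**2]])          # camera measurement variance

    true_position = 0.0                        # the device never moves
    x = np.zeros(2)                            # fused estimate [position, velocity]
    P = np.eye(2) * 1e-4                       # its covariance
    x_imu_only = np.zeros(2)                   # pure dead reckoning, for comparison

    for k in range(1, steps + 1):
        a = 0.0 + accel_bias                   # true acceleration is 0; the sensor adds bias
        # Predict step: runs for every IMU sample.
        x = F @ x + B * a
        P = F @ P @ F.T + Q
        x_imu_only = F @ x_imu_only + B * a
        # Update step: runs only when a camera frame arrives.
        if k % camera_every == 0:
            z = true_position + rng.normal(0.0, camera_noise)
            y = z - (H @ x)[0]                 # innovation: measured minus predicted
            S = H @ P @ H.T + R                # innovation covariance (1x1)
            K = (P @ H.T) / S[0, 0]            # Kalman gain (2x1)
            x = x + K[:, 0] * y
            P = (np.eye(2) - K @ H) @ P
    return abs(x[0] - true_position), abs(x_imu_only[0] - true_position)

fused_err, imu_err = fuse_imu_and_camera()
print(f"after 10 s: fused error {fused_err * 100:.2f} cm, IMU-only error {imu_err * 100:.1f} cm")
```

Under these assumptions the camera-corrected estimate stays close to the true position (well under 5 cm), while IMU-only dead reckoning drifts by about half a meter.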

Another interesting feature worth attention is plane detection. It helps ARKit identify a solid surface on which the framework can display content. Otherwise, augmented content would float in space, violating the laws of physics, which looks ridiculous. ARKit determines the plane based on the feature points captured by the optical system. An average user can often see plane detection at work as little dots (the detected feature points) appearing on the screen while the application runs.
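Apple has not published how ARKit groups feature points into planes, so here is a minimal, hypothetical sketch using a RANSAC-style search, one common technique for this kind of problem. It assumes the feature points are already expressed in a gravity-aligned world frame with y pointing up, so a horizontal plane is simply a height y = h; the tolerance, iteration count, and minimum support are made-up parameters.

```python
import numpy as np

def detect_horizontal_plane(points, tolerance=0.01, iterations=100,
                            min_inliers=20, seed=0):
    """RANSAC-style search for a horizontal plane y = h among 3-D feature points.

    points: (N, 3) array in a gravity-aligned, y-up world frame.
    Returns (h, inlier_mask), or None when no height has enough supporting points.
    """
    rng = np.random.default_rng(seed)
    heights = points[:, 1]
    best_mask = np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        h = heights[rng.integers(len(points))]   # hypothesis from one random point
        mask = np.abs(heights - h) < tolerance   # points within tolerance of that height
        if mask.sum() > best_mask.sum():
            best_mask = mask
    if best_mask.sum() < min_inliers:
        return None
    return float(heights[best_mask].mean()), best_mask  # refine h from all inliers

# Hypothetical point cloud: 200 noisy points on a tabletop 0.75 m up,
# plus 50 scattered points from other objects in the room.
rng = np.random.default_rng(1)
table = np.column_stack([rng.uniform(-0.5, 0.5, 200),
                         0.75 + rng.normal(0.0, 0.002, 200),
                         rng.uniform(-1.5, -0.5, 200)])
clutter = rng.uniform([-2.0, 0.0, -3.0], [2.0, 2.0, 0.0], size=(50, 3))
result = detect_horizontal_plane(np.vstack([table, clutter]))
if result is not None:
    height, inliers = result
    print(f"plane at y = {height:.3f} m with {inliers.sum()} supporting points")
```

Scattered points rarely agree on a single height, so they never gather enough support to form a plane, while the tabletop points do.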

ARCore

ARCore, for its part, uses a quite similar process to recognize the position of the smartphone relative to the real world. Google chose concurrent odometry and mapping, or COM for short, to gather the necessary information. Basically, the technology detects visually distinct elements of the scene, called feature points, and uses them to adjust the device's estimated location. Similarly to ARKit, ARCore combines visual information from the camera with the device's IMU (accelerometer and gyroscope) to estimate the position and orientation of the device in space over time. This way, rendered 3D content can be overlaid on the physical world so that it looks like part of it.

ARCore recognizes the feature points of any horizontal surface (a table, an image, or a tile on the pavement) and groups them into planes on which to display 3D content. Like other augmented reality frameworks, ARCore may have trouble detecting flat surfaces that lack texture and contrast, for instance, a white wall or a black floor.
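Why do blank walls and dark floors cause trouble? Feature points come from local changes in intensity, and a uniform surface has almost none. The sketch below uses a deliberately crude, hypothetical proxy (counting pixels with a strong intensity gradient) rather than any detector ARCore actually uses, and synthetic images instead of real camera frames.

```python
import numpy as np

def count_candidate_features(gray, threshold=30.0):
    """Count pixels whose intensity gradient exceeds a threshold.

    A crude proxy for how many trackable feature points an image offers;
    real detectors (e.g. FAST or ORB corners) are far more selective.
    gray: 2-D array of intensities in [0, 255].
    """
    gy, gx = np.gradient(gray.astype(float))   # vertical and horizontal gradients
    magnitude = np.hypot(gx, gy)
    return int((magnitude > threshold).sum())

rng = np.random.default_rng(0)
white_wall = np.full((120, 160), 245.0)                   # flat, bright, no texture
dark_floor = 20.0 + rng.integers(0, 4, size=(120, 160))   # dark, with barely any contrast
pavement = rng.integers(0, 256, size=(120, 160))          # high-contrast random texture

for name, img in [("white wall", white_wall), ("dark floor", dark_floor),
                  ("textured pavement", pavement)]:
    print(f"{name}: {count_candidate_features(img)} candidate features")
```

The wall and the floor yield no candidates at all, while the textured surface yields thousands, which mirrors why plane detection struggles on featureless surfaces.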

To increase realism, ARCore estimates the intensity of the lighting in the scene by analyzing the view from the visual system (the camera). The 3D content can then be displayed with the same amount of light as the real objects around it.
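ARCore's actual light-estimation algorithm is not described here, so the following is only a minimal sketch of the general idea. It assumes the estimate is simply the frame's average luminance (using Rec. 709 weights) and that the renderer scales a virtual object's color by that value; `estimate_light_intensity` and `shade` are hypothetical names.

```python
import numpy as np

# Rec. 709 weights for relative luminance; applying them directly to
# gamma-encoded camera pixels is only an approximation.
REC709 = np.array([0.2126, 0.7152, 0.0722])

def estimate_light_intensity(frame_rgb):
    """Average luminance of an RGB frame (H x W x 3, values 0-255), scaled to [0, 1]."""
    luminance = frame_rgb.astype(float) @ REC709 / 255.0
    return float(luminance.mean())

def shade(base_color, intensity):
    """Scale a virtual object's RGB base color (floats in [0, 1]) by the scene intensity."""
    return np.clip(np.asarray(base_color, dtype=float) * intensity, 0.0, 1.0)

# Synthetic uniform frames standing in for camera images.
dim_room = np.full((480, 640, 3), 64, dtype=np.uint8)
sunny_room = np.full((480, 640, 3), 230, dtype=np.uint8)
orange = [1.0, 0.5, 0.2]
for name, frame in [("dim room", dim_room), ("sunny room", sunny_room)]:
    k = estimate_light_intensity(frame)
    print(f"{name}: intensity {k:.2f}, rendered color {shade(orange, k).round(2)}")
```

The same orange object comes out darker in the dim room and brighter in the sunny one, so it blends in with its real surroundings instead of glowing uniformly.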

Moreover, the device's pose changes as you interact with the application, and ARCore ensures that your experience is not ruined by 3D objects being displayed incorrectly. Whenever you augment the real world with virtual content, you need to define an anchor for it so that ARCore can track its position more accurately. Anchors also keep the content stable relative to the real world, even as the device moves.
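The idea behind anchors can be sketched with plain 4x4 transforms. This is a minimal illustration, not ARCore's API: `Anchor`, `VirtualObject`, and `make_pose` are hypothetical names, and the poses are made-up numbers. Because the object's pose is stored relative to its anchor, any correction the tracking system applies to the anchor automatically carries the object along.

```python
import numpy as np

def make_pose(yaw_rad, translation):
    """Build a 4x4 rigid transform: rotation about the vertical y axis, then translation."""
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    pose = np.eye(4)
    pose[:3, :3] = [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]
    pose[:3, 3] = translation
    return pose

class Anchor:
    """Hypothetical anchor: a world-space pose that tracking may refine over time."""
    def __init__(self, pose):
        self.pose = pose

class VirtualObject:
    """Virtual content stored relative to an anchor rather than in raw world coordinates."""
    def __init__(self, anchor, local_offset):
        self.anchor = anchor
        self.local_offset = local_offset

    def world_pose(self):
        # Compose the anchor's current pose with the object's fixed offset from it.
        return self.anchor.pose @ self.local_offset

# An anchor on a tabletop, with a virtual vase placed 10 cm above it.
table_anchor = Anchor(make_pose(0.0, [0.0, 0.75, -1.0]))
vase = VirtualObject(table_anchor, make_pose(0.0, [0.0, 0.10, 0.0]))
print("before refinement:", vase.world_pose()[:3, 3].round(3))

# Later, tracking refines its estimate of where the table really is.
table_anchor.pose = make_pose(0.05, [0.02, 0.74, -1.01])
print("after refinement: ", vase.world_pose()[:3, 3].round(3))
```

The vase never has to be moved by hand: it stays 10 cm above the table even though the table's estimated position changed.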

Documentation & Demos

On Apple's website, developers can download beta versions of iOS 11 and Xcode 9, which include the augmented reality SDK, and try building AR applications before the official release. Everyone also has unlimited access to detailed documentation that introduces ARKit and explains the fundamental concepts (what AR technology is, how it works, how to build for it, how to place virtual objects, how to work with interactive content, etc.). Moreover, Apple encourages developers to share their newly built apps with the community and the world, offering extra promotion to the most interesting ones.

Google's official website now provides a fundamental explanation of ARCore and an overview of the technology and its features. It also has detailed guides on how to get started building for AR in Android Studio, Unreal Engine, Unity, and on the web. The website also contains a link to download the ARCore SDK.

Market Potential

Google might have a few advantages when entering the AR market, because it has been working with this technology for much longer. Even considering the great failure of Google Glass and the long road ahead for the Tango project, the company has had its finger in the AR pie for quite a while. However, with the iOS 11 release set for the next few days, ARKit is poised to reach the market first, which gives Apple a huge opportunity to bite off a bigger piece of it.

Here, it is pretty hard to predict which company will show better results; only time will tell. ARKit will soon be available on many more devices, since it supports tablets as well as phones, while ARCore initially targets only a few Android devices. Although Apple and Google chose similar yet quite different approaches, Apple may have a more successful start. In the long term, however, Google's AR could grow bigger, because Android's market share is four times that of iOS.

ARKit vs. ARCore Conclusions

- Both companies have made the right move by integrating augmented reality SDKs into their software, which means the technology will be far more accessible to a mass audience. Google has even taken it one step further and is trying to popularize AR in web browsers.

- The technology will reach the average users who download educational, communication, and GIS applications (pretty much everyone except gamers). Beyond gaming, AR still has a lot of work to do to prove itself, even after everyone went crazy for Pokémon Go.

- While Google was mainly focused on mobile VR at the beginning of the year, Apple worked hard to make AR a mainstream mobile technology, and it seems the company has done much more work and promotion to make that happen.

So it appears that the killer app will likely arrive on iOS first, which will allow Apple to seize the market and set its own rules, while Google will have to catch up. Augmented reality has only just begun, so get some popcorn and make yourself comfortable: it will be a long ride.