Mixed reality is set to completely transform what people expect from technology. It is erasing the barrier between the virtual and the physical, which in the process opens new vectors of innovation and integration. From business to gaming and from art design to healthcare, the applications of mixed-reality devices are limited only by imagination and the developer’s skills.

The most advanced mixed reality device at the moment is Microsoft’s HoloLens. Having been released to leading developers such as Program-Ace, it has been used to deliver several stunning mixed reality solutions using Unity. Here is a breakdown of the five major components (holograms, spatial mapping, gaze, gestures, and voice) central to HoloLens app development with Unity, as well as pieces of expert advice from Alexander Tomakh, a lead R&D developer here at Program-Ace.

Placing Holograms

The fundamental concepts and principles that underpin rendering 3D models for ‘traditional’ formats mirror those for mixed reality. The processes of sculpting, rigging, meshing, etc. are still necessary and largely function the same. Differences in the process arise, however, when it comes to integrating these assets into a mixed reality environment. Pay careful attention to the specifications as they will have a significant bearing on the user experience.

The greatest upside of mixed reality is the visceral, powerful visual experience it provides. Whereas screen-based solutions clearly confine the virtual to a box, mixed reality meshes the two into a single living experience. This, however, carries certain risks: because the experience is so physical, getting it wrong can make the user physically ill.

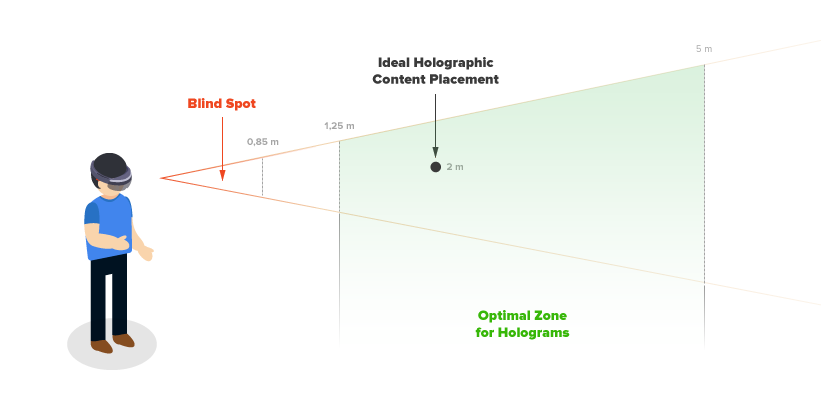

When using Unity, it is very important to set the camera’s near clipping plane to at least 85 cm. HoloLens projects a separate field of vision for each eye, and the two fields overlap at 85 cm. Anything closer than that is therefore visible to only one eye. This rarely happens in normal life, so the brain has difficulty processing it, and the result is eye strain.
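To make the near clipping rule concrete, here is a minimal, language-neutral sketch in Python (not Unity code). It checks whether a hologram sits beyond the 85 cm threshold, measuring depth along the view direction. The names `view_depth` and `passes_near_clip` are hypothetical and exist only for this illustration; in a real project the engine's camera setting would do this work.

```python
import math

NEAR_CLIP_M = 0.85  # the 85 cm overlap distance quoted in the text


def view_depth(head_pos, forward, point):
    """Distance from the head to `point`, measured along the view direction."""
    length = math.sqrt(sum(c * c for c in forward))
    if length == 0:
        raise ValueError("forward must not be the zero vector")
    unit = [c / length for c in forward]
    offset = [p - h for p, h in zip(point, head_pos)]
    return sum(o * u for o, u in zip(offset, unit))


def passes_near_clip(head_pos, forward, point, near_clip_m=NEAR_CLIP_M):
    """True if `point` lies at or beyond the near clipping plane."""
    return view_depth(head_pos, forward, point) >= near_clip_m
```

A hologram two meters in front of the user passes this check, while one placed 50 cm away would be clipped rather than shown to a single eye.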

Equally important for a positive physiological experience is an appropriate frame rate. If the frame rate is too low, the user’s brain processes information faster than it is being delivered. This, in turn, induces a feeling of “virtual reality sickness,” which in many ways is similar to typical forms of motion sickness. The principal symptoms are general discomfort, nausea, and headaches. This, however, must be balanced against the unfortunate fact that most devices today cannot supply the processing power needed to produce graphics comparable to high-end desktop and console-based platforms. As a result, our experts at Program-Ace recommend a frame rate of around 30 fps. This gives the user a pleasant, discomfort-free experience as well as crisp, quick animation.
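A frame-rate target is easier to reason about as a time budget per frame. The short Python sketch below converts a target such as 30 fps into milliseconds and flags frames that ran over budget. The function names and the sample timings are assumptions made for illustration only, not measurements from a device.

```python
def frame_budget_ms(target_fps):
    """Time available to update and render one frame, in milliseconds."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def over_budget_frames(frame_times_ms, target_fps):
    """Indices of frames that took longer than the per-frame budget."""
    budget = frame_budget_ms(target_fps)
    return [i for i, t in enumerate(frame_times_ms) if t > budget]
```

At 30 fps the budget is roughly 33.3 ms, so any frame that takes longer than that to prepare will arrive late and may be perceived as stutter.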

Q: What’s the biggest difference between modeling for mixed reality and other formats?

In traditional formats, the programmer is in control of all the variables, including lighting, spacing, and the environment. But now the models are in 3D and bound to objects in your room. This can bring new variables into the situation, such as figuring out how they should behave and appear in changing scenarios.

Q: As mixed reality technology improves, how common do you believe holograms will become in society?

Holograms will not only become very common, but mixed reality, in general, will completely change how we engage with technology. We are witnessing only the primitive first steps of this new wave but the capabilities are endless. The worlds we currently enter into through our phones - I’m talking here about social, gaming, and commercial worlds - we will soon be able to virtually inhabit in the real, physical world. You see a restaurant across the street, for example, and instantly the menu will appear in your field of vision along with the latest reviews and a video of the chef preparing the food. It’s going to be wild.

Spatial Mapping

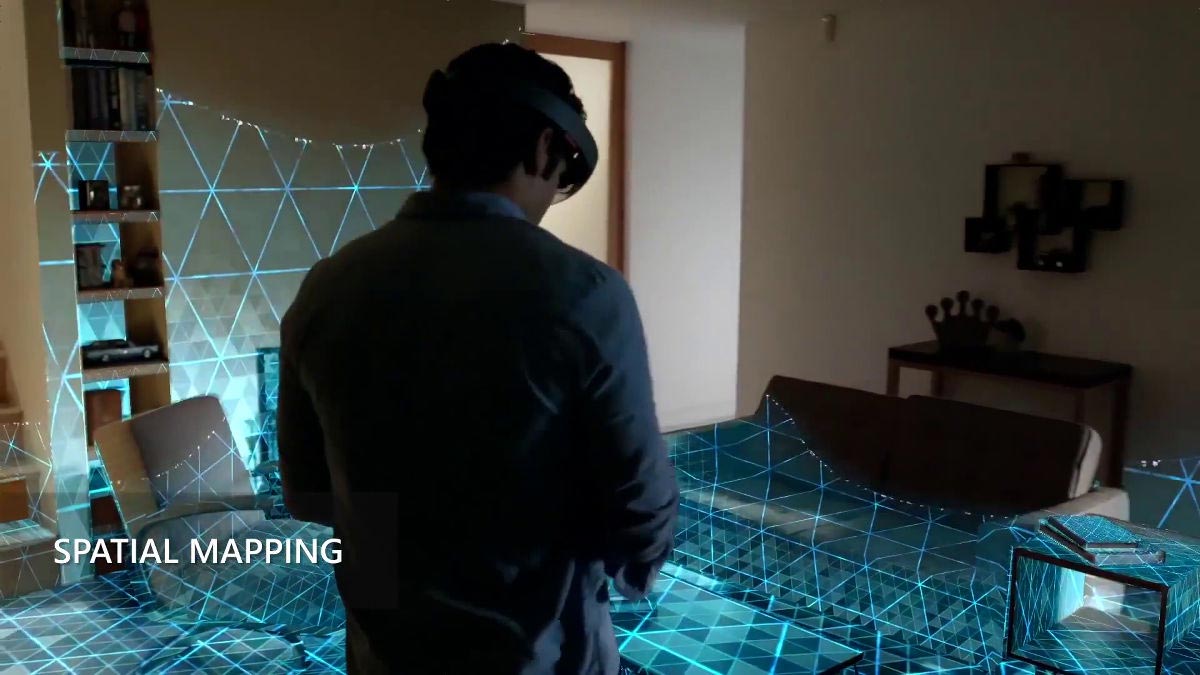

Underpinning the entire HoloLens project is “spatial mapping.” This breakthrough allows virtual elements to co-exist naturally and realistically with the physical world. This technology is basically divided into two functions: the so-called “Spatial Surface Observer” and spatial surfaces. The former is the assembly of devices and programs responsible for reading and meshing the physical world in real time, which in turn informs virtual elements how to interact appropriately. Spatial surfaces are meshes of the real world created by the Spatial Surface Observer that are bound by a coordinate system. The more the user explores a given area, the denser and more exact the mesh becomes. This allows holograms placed in the real world to be anchored in physical reality.

By choosing Unity, programmers can use this spatial mapping format to create ingenious applications. Fundamental to navigating this new landscape is the use of Unity’s raycast, which is a straight line emitted from a point of origin in a single direction to report information about collisions. This allows Unity to accurately apply physics for the collisions between virtual and physical elements as well as occlude them. In this setting, occlusion refers to the concept of virtual elements realistically being blocked from the user's line of sight in accordance with the user’s location. Among other things, occlusion differentiates mixed reality from augmented reality.
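The raycast and occlusion ideas above can be sketched outside of Unity. The Python below is an illustrative stand-in, not the engine's implementation: it intersects a ray with the triangles of a mesh using the Möller-Trumbore test (a technique chosen here for the example) and treats a hologram as occluded when a real-world surface is hit first. All function names are hypothetical, and `direction` is assumed to be a unit vector so that the returned value is a distance.

```python
EPSILON = 1e-9


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle_distance(origin, direction, v0, v1, v2):
    """Distance along the ray to the triangle, or None if there is no hit."""
    edge1, edge2 = _sub(v1, v0), _sub(v2, v0)
    h = _cross(direction, edge2)
    a = _dot(edge1, h)
    if abs(a) < EPSILON:          # ray is parallel to the triangle
        return None
    f = 1.0 / a
    s = _sub(origin, v0)
    u = f * _dot(s, h)
    if u < 0.0 or u > 1.0:        # hit point lies outside the triangle
        return None
    q = _cross(s, edge1)
    v = f * _dot(direction, q)
    if v < 0.0 or u + v > 1.0:
        return None
    t = f * _dot(edge2, q)
    return t if t > EPSILON else None   # ignore hits behind the origin


def raycast(origin, direction, triangles):
    """Nearest hit distance over a list of (v0, v1, v2) triangles, or None."""
    hits = [d for tri in triangles
            if (d := ray_triangle_distance(origin, direction, *tri)) is not None]
    return min(hits) if hits else None


def is_occluded(origin, direction, hologram_distance, triangles):
    """True if a real-world surface lies between the user and the hologram."""
    hit = raycast(origin, direction, triangles)
    return hit is not None and hit < hologram_distance
```

With a single wall triangle two meters ahead, a hologram three meters away along the same ray is reported as occluded, while one a meter away is not.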

Q: How can spatial mapping be used to innovate in new ways?

Spatial mapping is a huge step forward. It basically allows programs to see the real world — but not just see this world: analyze it, measure it, and store it. Let’s say I’m an engineer on a job site and I want to know how wide a gap is. Wearing a HoloLens, I can simply stare in the direction and measure it from 20 meters away. It’s great. But it’s not limited to that. The HoloLens stores the data from the meshes, allowing the user to literally see through walls if he’s been there before. Or maybe you’re buying a new couch; now, you can literally test out if it’ll fit in your living room whether you’re at home or ten thousand miles away. These are the kind of possibilities that spatial mapping is opening up for programmers to play with and explore.

Gaze Recognition

Spatial mapping and holograms work in conjunction to provide the fundamental structure of mixed reality. Gaze is the first of the three methods for navigating and manipulating this new world.

When using a HoloLens, the vector of vision provided by the device is determined by where the user’s head is pointing. The center of the vector becomes the “cursor.” With this in mind, let’s imagine a simple app that offers anatomically-correct models of the human body for educational purposes. If the user were to “gaze” at a certain organ, the organ would become selected and its name would appear. Where the user is gazing is visualized as a small circle, similar to a reticle in shooters. The small circle (though it can be programmed to appear as anything, from a transparent dot to an ice-cream cone) acts as a holographic cursor.

When using Unity to program apps for HoloLens, there are multiple ways our experts can play with gaze to produce various effects. Some of these include billboarding, indicators, and a tag-along feature.

- Billboarding causes an asset to be orientated toward the user’s gaze. This does not necessarily mean the model is always in the user's field of vision, but when it is, it faces the user. This is particularly useful for text.

- Indicators can be added to the cursor in order to direct the user’s movement and attention, should it be necessary or helpful in a given app.

- The tag-along feature programs a model to follow the user in a non-obstructive manner so that it is always on hand when needed.
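Two of the effects in the list above reduce to a few lines of vector math. The following Python sketch is an assumption-laden illustration rather than Unity code: `billboard_yaw_degrees` computes the rotation about the vertical axis that turns an object toward the user, and `tag_along_step` moves an object part of the way toward a point at a fixed distance in front of the user. Both function names and the default values are hypothetical, and `forward_unit` is assumed to be a unit vector.

```python
import math


def billboard_yaw_degrees(object_pos, user_pos):
    """Yaw (rotation about the vertical y axis) that faces the object toward the user."""
    dx = user_pos[0] - object_pos[0]
    dz = user_pos[2] - object_pos[2]
    return math.degrees(math.atan2(dx, dz))


def tag_along_step(current, head_pos, forward_unit, follow_distance=2.0, smoothing=0.5):
    """Move `current` a fraction of the way toward a point in front of the user."""
    target = tuple(h + f * follow_distance for h, f in zip(head_pos, forward_unit))
    return tuple(c + (t - c) * smoothing for c, t in zip(current, target))
```

Calling `tag_along_step` once per frame with a small `smoothing` value makes the object trail the user gently instead of snapping into place, which is one way to keep it non-obstructive.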

Q: How can the cursor be customized to improve the user’s experiences?

Mixed reality is forcing developers to reconsider how they structure user interfaces (UI). The 3D nature of mixed reality makes it impossible for traditional non-diegetic elements, such as HUDs and overlays, to exist comfortably for the user. Instead, we need to find ways to help the user in an organic way. For me, the best way to do this is by using the cursor to reflect information. To do this in Unity, the raycast should be used continuously to understand which part of the mesh the user is looking at. The raycast collision gives the program information about what is being looked at. So, we should program the app to show a cursor that tells the user what he can do with the object he is looking at. For instance, if it's a button, the default cursor should become a specific "tapping" cursor. If the object can be dragged, give the cursor a different, unique visualization. This sort of simple, intuitive design makes a huge difference to users and greatly improves their experience.
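The cursor logic described in this answer can be sketched as a small lookup from "what the gaze ray hit" to "which cursor to show." The Python below is a simplified illustration, not Unity code. `GazeTarget`, `CursorStyle`, and `cursor_for` are hypothetical names, and the target is assumed to come from whatever raycast the app already performs each frame.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class CursorStyle(Enum):
    DEFAULT = "default"
    TAP = "tap"
    DRAG = "drag"


@dataclass
class GazeTarget:
    """What the gaze raycast hit, with the interactions it supports."""
    name: str
    tappable: bool = False
    draggable: bool = False


def cursor_for(target: Optional[GazeTarget]) -> CursorStyle:
    """Pick a cursor that tells the user what they can do with the target."""
    if target is None:
        return CursorStyle.DEFAULT   # gaze is not resting on anything interactive
    if target.tappable:
        return CursorStyle.TAP
    if target.draggable:
        return CursorStyle.DRAG
    return CursorStyle.DEFAULT
```

Keeping this mapping in one place makes it easier to stay consistent: every button in the app gets the same tapping cursor, and every draggable object gets the same drag cursor.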

Gesture Recognition

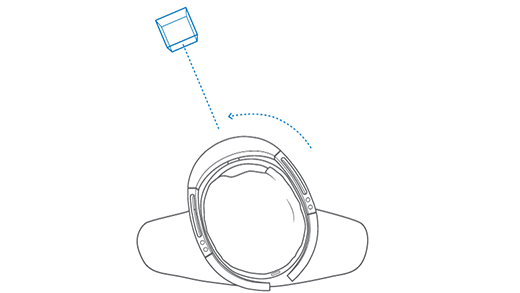

The second way to interact with mixed reality is through gestures. These are physical motions performed by the user that devices like HoloLens are able to perceive and obey. The gestures can broadly be categorized as discrete and continuous. The former are sharp, complete actions, such as clicking on something, which is performed as a finger tap. The latter incorporates actions such as scrolling, rotating, and manipulating. For these gestures, it is necessary for the user to press and hold with their finger. The manipulation of the object will only end when the user performs the release gesture.

When using Unity, there are several ways for the developer to optimize the user’s experience when using gestures. Providing feedback for when the user’s gesture has been recognized is a simple way to avoid user frustration. How the developer chooses to represent this recognition is up to him/her, but it could include a change in color, size, or shape of the cursor. In the same way that indicators can be used to direct the user’s gaze, a predictive script can be programmed to warn the user that their gestures are approaching the edge of the field of vision.
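One possible shape for the predictive warning mentioned above is sketched below in Python. It assumes the hand position is already available as normalized coordinates, with 0 and 1 marking the edges of the area in which gestures are tracked, along with a velocity in the same units per second. The function name, the look-ahead time, and the margin are all assumptions for this illustration, not values from the device.

```python
def edge_warnings(position, velocity, lookahead_s=0.25, margin=0.1):
    """Edges the hand is about to cross, given normalized (x, y) position and velocity."""
    # Predict where the hand will be shortly, so the warning arrives in time.
    px = position[0] + velocity[0] * lookahead_s
    py = position[1] + velocity[1] * lookahead_s
    warnings = []
    if px < margin:
        warnings.append("left")
    elif px > 1.0 - margin:
        warnings.append("right")
    if py < margin:
        warnings.append("bottom")
    elif py > 1.0 - margin:
        warnings.append("top")
    return warnings
```

The returned list could drive the same kind of indicator used for gaze, for example an arrow on the cursor pointing back toward the center.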

Q: How does “gesturing” change how users understand app navigation?

Most users with at least a working fluency with current mobile devices know how to navigate them using the swipe and scroll gestures. Mixed reality, however, is adding a whole new axis to the experience. Whereas in the past, users only had to worry about up/down and left/right, now depth is also a dimension. The key thing is just to keep things intuitive. Users will quickly figure it out if it isn’t too convoluted and cute.

Q: What are the challenges involved with programming gestures?

When programming for a device, it is important to keep in mind the device’s limitations. Mixed reality is still in its infancy and there are some growing pains that are to be expected. While dragging an object, for example, hand position is not always correctly picked up by the system. Maybe you move your hand 5 cm to the left, but the system recognizes it as 4 or 6 cm. This does not allow very accurate placement. Take this into account when programming a game or app, and make sure that a minute difference in position is never of critical importance. This will help avoid frustration on the user’s part and improve their general experience.
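One way to keep small tracking errors from mattering is to build tolerance into placement. The Python sketch below shows two simple options: snapping a measured offset to a coarse grid, and accepting a placement when it is close enough to the target. The function names, the 5 cm grid, and the 1.5 cm tolerance are assumptions chosen to match the example in the answer above.

```python
def snap_to_grid(value_cm, step_cm=5.0):
    """Round a measured offset to the nearest grid step."""
    if step_cm <= 0:
        raise ValueError("step_cm must be positive")
    return round(value_cm / step_cm) * step_cm


def is_close_enough(measured_cm, target_cm, tolerance_cm=1.5):
    """Accept a placement that lands within the tolerance of the target."""
    return abs(measured_cm - target_cm) <= tolerance_cm
```

With a 5 cm grid, a drag that the system reads as 4 cm or 6 cm still snaps to the 5 cm the user intended.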

Voice Recognition

The third and final way of engaging with mixed reality is by using voice commands. These can be used to express a wide range of functions for apps, from navigation to play. Due to the spatial and “living” nature of mixed reality, voice commands feel far more intuitive and natural than speaking into a mobile device. When using Unity with the Dictation and Grammar Recognizers, our experts employ and suggest the following guiding principles.

- Voice commands should be short and concise. There is no need for extra words that the user may forget or confuse.

- That being said, they should not be monosyllabic. It is harder for the system to pick up such short utterances and it is easier for them to be confused.

- Everything should be uniform. Words like “open,” “bring up,” or “start” should not be used interchangeably for different commands. One should be chosen and adhered to.
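The principles above can be turned into a quick review pass over a command list before it is handed to a recognizer. The Python below is a rough sketch under stated assumptions: the syllable count is a crude vowel-group heuristic, the three-word limit is arbitrary, and the names `rough_syllables` and `review_commands` are hypothetical. It is a design aid, not part of any speech API.

```python
import re

MAX_WORDS = 3  # assumed limit for a "short" command


def rough_syllables(phrase):
    """Crude syllable estimate: count vowel groups in each word (at least one per word)."""
    return sum(len(re.findall(r"[aeiouy]+", word)) or 1
               for word in phrase.lower().split())


def review_commands(commands, synonym_groups):
    """Return (command, problem) pairs for commands that break the guidelines."""
    problems = []
    for command in commands:
        if len(command.split()) > MAX_WORDS:
            problems.append((command, "too long"))
        if rough_syllables(command) < 2:
            problems.append((command, "monosyllabic"))
    lowered = [c.lower() for c in commands]
    for group in synonym_groups:
        # Collect the verbs from this group that actually start a command.
        used = sorted(v for v in group
                      if any(c == v or c.startswith(v + " ") for c in lowered))
        if len(used) > 1:
            problems.append((", ".join(used), "interchangeable verbs"))
    return problems
```

For the list `["open menu", "start game", "go"]` and the synonym group `("open", "bring up", "start")`, the review flags "go" as monosyllabic and reports that both "open" and "start" are in use.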

Q: What’s your process for deciding when a voice command is necessary and what the exact wording will be?

As a rule of thumb, whenever there’s a voice command, there should also be a hand gesture - or series of gestured actions - that arrives at the same result, and vice versa. It’s very easy to program verbal commands into a program using the recognizer, but consider your audience. Perhaps not all users will be native speakers of the language you have chosen to use. It would be extremely frustrating if they were unable to access a part of the application simply because they couldn’t correctly pronounce a certain command.
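This rule of thumb is easy to check mechanically if the app keeps its input bindings in one table. The Python sketch below assumes a hypothetical `bindings` dictionary that maps each action to its voice command and gesture, and reports any action that is reachable by only one of the two. The structure and the names are illustrative assumptions.

```python
def missing_inputs(bindings):
    """Map each action to the input kinds ("voice", "gesture") it is missing."""
    gaps = {}
    for action, inputs in bindings.items():
        missing = [kind for kind in ("voice", "gesture") if not inputs.get(kind)]
        if missing:
            gaps[action] = missing
    return gaps


# Example table: "rotate" has a gesture but no spoken equivalent.
example_bindings = {
    "open_menu": {"voice": "open menu", "gesture": "air tap"},
    "rotate": {"gesture": "hold and drag"},
}
```

Running `missing_inputs(example_bindings)` reports that "rotate" lacks a voice command, which is the kind of gap that would lock out a user who cannot perform the gesture.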

Conclusion

Developing solutions for HoloLens is a whole new terrain, replete with unique challenges and staggering possibilities. Holograms are bringing sci-fi daydreams into everyday life. Spatial mapping is allowing programmers to turn our physical world into a virtual variable to be utilized and manipulated. The triumvirate of gaze, gesture, and voice is ushering in a new, more intuitive way to engage with and navigate through digital environments. The robust research and development team at Program-Ace is constantly finding new ways to push the limits of these new technologies in order to open new horizons in business, commerce, gaming, and design. In this nascent, game-changing field, the future is very bright and the opportunities are numerous.